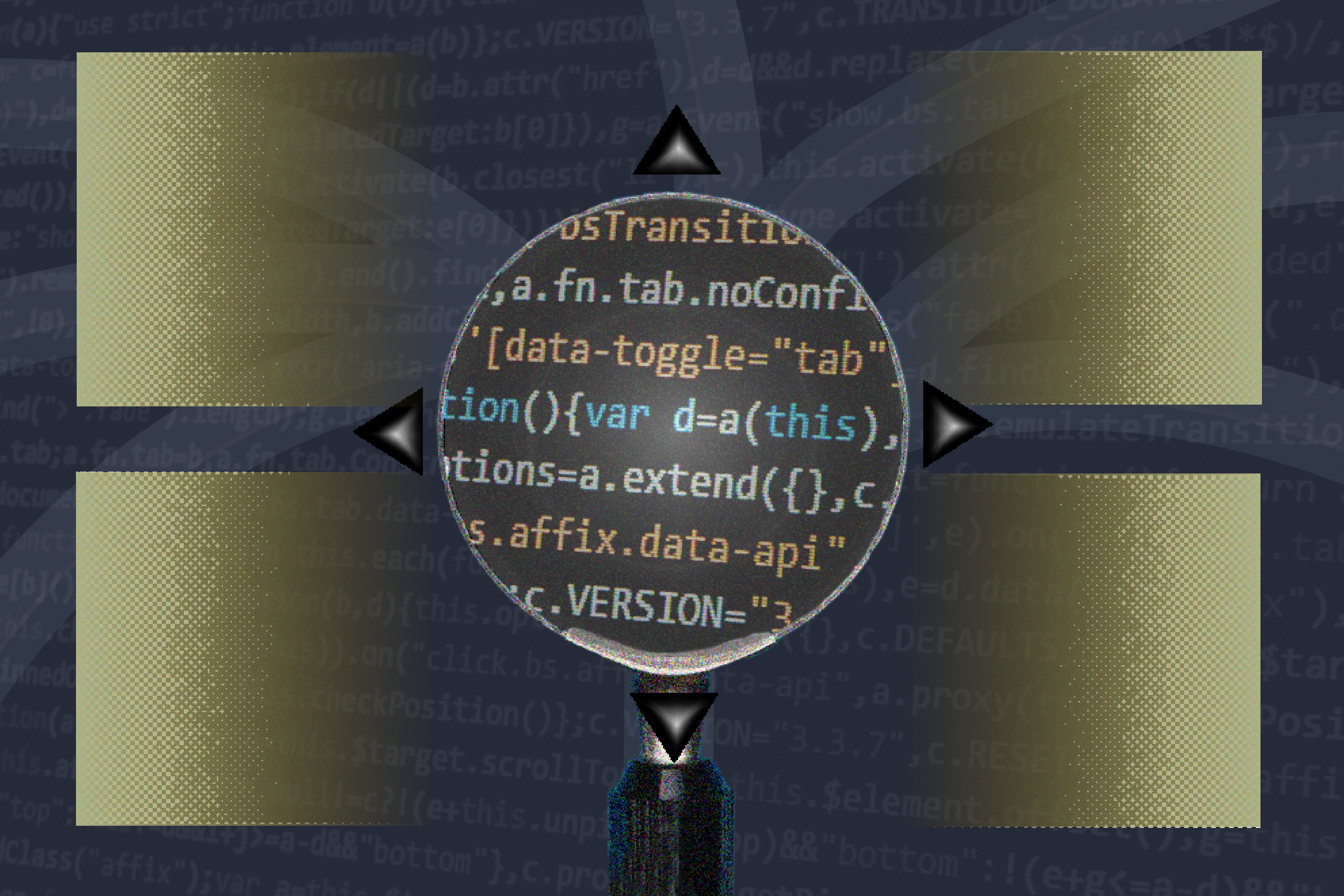

Exposing biases, moods, personalities, and abstract concepts hidden in large language models

MIT News • 2/19/2026

Summary

Researchers at MIT have developed a method for exposing biases, moods, personalities, and abstract concepts hidden in large language models (LLMs). The method could help root out vulnerabilities and improve the safety and performance of LLMs.

Anti-Lindy Similar stories

Helping AI agents search to get the best results out of large language models

MIT News • 2 weeks ago

AI models were given four weeks of therapy: the results worried researchers

Nature News • 1+ months

Distinct AI Models Seem To Converge On How They Encode Reality

Quanta Magazine • 1+ months

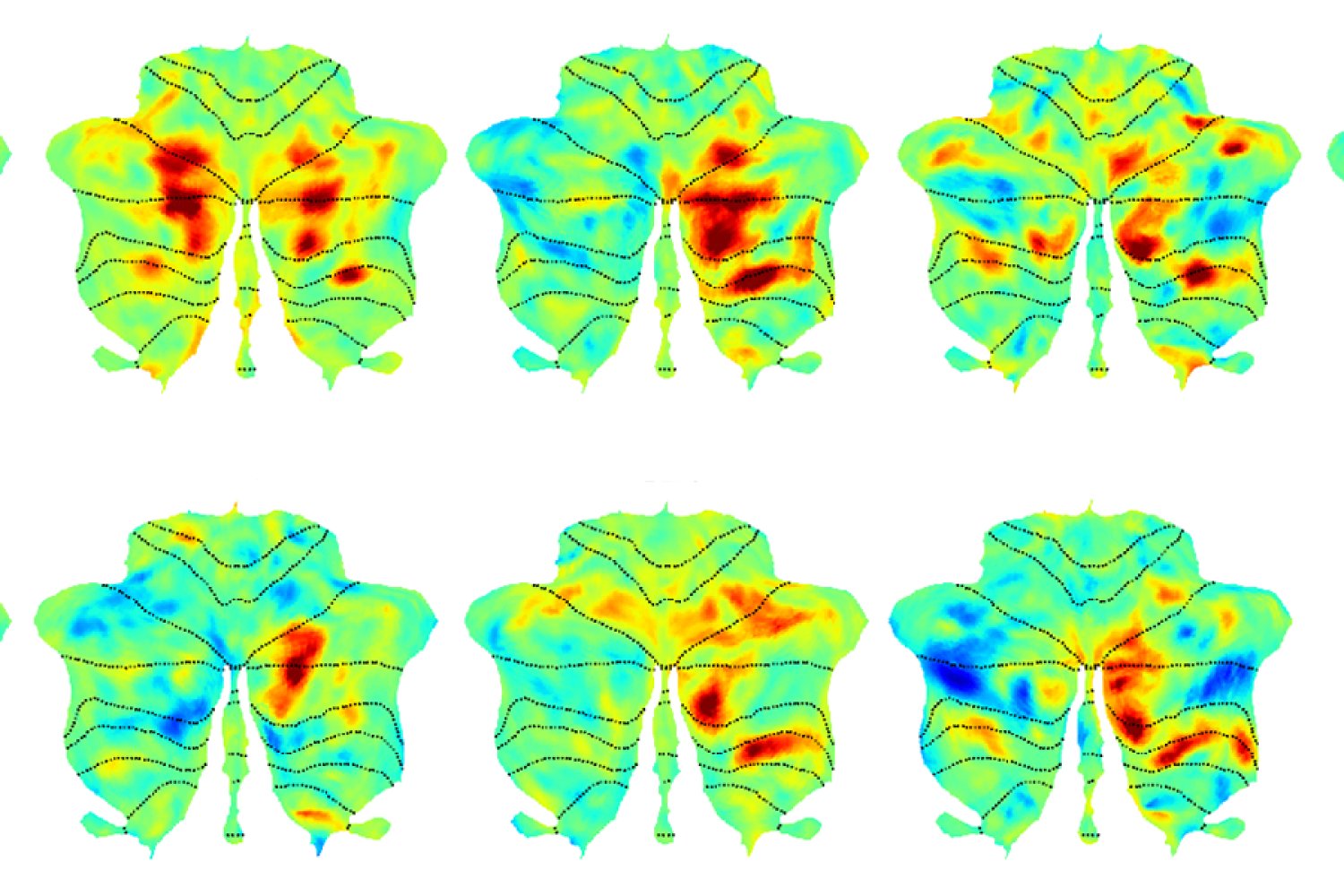

A satellite language network in the brain

MIT News • 2 weeks ago

Using AI, Mathematicians Find Hidden Glitches in Fluid Equations

Quanta Magazine • 1+ months

These Bizarre, Centuries-Old Greenland Sharks May Have a Hidden Longevity Superpower

Scientific American Ideas • 1+ months

The Names of the Game: Moral Language and the Killing of Alex Pretti

3 Quarks Daily • 1 week ago

This Hidden Brain Region Could Help You Stay Resilient in Old Age

Nautilus • 1+ months

The Hidden Costs of L.A.’s “Mansion Tax”

City Journal • 1 week ago

A Hidden Lesson of the Minnesota Welfare Scandal

The Atlantic Ideas • 2 weeks ago